In a previous post we saw that Infrastructure as Code is a way of managing infrastructure and cloud resources that consists of a set of processes and best practices.

But there is also a need for a set of tools, and this post will offer an overview of the most used tools in the industry. Most of them are free, open source tools, matched by a vendor-supported version that requires a license or a subscription. There are also SaaS versions that offer a free tier.

I'm describing the following tools in this post:

- Version Control Systems

- Automation tools (Ansible, Terraform)

- Accessory tools for "scaffolding" (Vault, etc).

But, before we explore the tools, just a few more words about the process.

Programmable infrastructure

The simple fact that the infrastructure is programmable via the API it exposes does not mean that anyone can come and change its configuration or state.

We don't want anarchy, and even less do we want programmers to do whatever they like, bypassing the owners of the target technology domain. The administrators, who are also the subject matter experts (SMEs), are responsible for ensuring the reliability, performance and security of the system, and cannot afford to have a naive developer compromise it.

So what we mean by treating the infrastructure as code is applying the same processes, and the same tools, as we do with the source code of the applications. Infrastructure provisioning and configuration should follow the same process that we implement for the applications: write the code, version the code, test it statically for quality and security, deploy it automatically, test it dynamically (functional, performance, reliability and security tests), then deploy it in the production environment. Generally, this happens within a CI/CD pipeline with a good level of automation (but the same sequence could also be executed manually).

Now that we have agreed on the basic principles, let's have a look at the tools.

Version Control Systems

Version control is a system that records changes to a file or set of files over time so that you can recall specific versions later.

The purpose of version control is to allow software teams to track changes to the code, while enhancing communication and collaboration between team members. Version control provides a continuous, simple way to develop software.

Since we want to manage the infrastructure as developers do with the software applications, we use the same organization for the files describing the desired state of the infrastructure (remember, infrastructure includes physical, virtual and cloud resources).

A central repository is the single source of truth. Local working copies can be used to evolve the code, to create new versions and test them. After validation, a new version is committed to the VCS (version control system). The most used platforms are GitHub and GitLab, both built on Git, but there is a large choice available.

Pipeline orchestrators

When a new version is created, a number of activities must take place: they can be executed manually or, better, automated to increase speed and reduce exposure to human error.

If any task or test fails, a notification is sent to the right stakeholder to solve the problem and the pipeline aborts. A new pipeline cycle will restart after the issue has been fixed. You might build a single pipeline for the infrastructure and for the application deployment or, more often, separate them into distinct processes: depending on the work organization and the availability of resources, there is no strict need to rebuild the target environment every time a new application version is released.
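The abort-and-notify behaviour described above can be sketched in a few lines of Python. This is only an illustration of the idea, not the way any specific orchestrator works: the stage names, the `run_pipeline` function and the `notify` callback are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple

# A stage is a (name, task) pair; the task returns True on success.
Stage = Tuple[str, Callable[[], bool]]


@dataclass
class PipelineResult:
    succeeded: bool
    completed: List[str] = field(default_factory=list)  # stages that passed
    failed_stage: Optional[str] = None                   # first stage that failed


def run_pipeline(stages: List[Stage], notify: Callable[[str], None]) -> PipelineResult:
    """Run the stages in order; on the first failure, notify and abort."""
    completed: List[str] = []
    for name, task in stages:
        try:
            ok = task()
        except Exception:
            ok = False  # a crashing task counts as a failed stage
        if not ok:
            notify(f"Stage '{name}' failed: pipeline aborted")
            return PipelineResult(False, completed, name)
        completed.append(name)
    return PipelineResult(True, completed)


if __name__ == "__main__":
    messages: List[str] = []  # stands in for e-mail, chat, ticketing...
    stages: List[Stage] = [
        ("static checks", lambda: True),
        ("deploy to test", lambda: True),
        ("dynamic tests", lambda: False),  # simulated failure
        ("deploy to production", lambda: True),
    ]
    result = run_pipeline(stages, messages.append)
    print(result)
    print(messages)
```

In this run the production deployment is never reached, because the pipeline stops at the first failing stage and only reports it.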

Orchestrators for CI/CD pipelines can be open-source or commercial products or can be engines incorporated in the version control system.

The following picture shows an example of a pipeline:

Automation tools (Ansible, Terraform)

These are not the only tools available for automation, but they are by far the most widely used.

They access the target system (infrastructure, cloud and components in the software stack) remotely, with no need for a local agent.

Generally the target APIs are wrapped in plugins for the automation engine (called Ansible modules and Terraform providers) that are built and supported either by the vendor of the target technology or by the open source community.

Ansible was born for managing servers, so its approach is more oriented towards configuration management. Terraform excels at provisioning resources, and leads you to concepts like immutable infrastructure (see below).

Both tools are great and let you define the desired state of the system, making sure that the current state matches the desired state. If it does not, changes are applied automatically, in some cases by destroying configuration items and recreating them as they need to be. Ansible, by contrast, typically changes the configuration of existing resources in place, in that it is more procedural than declarative.
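To make the desired-state idea more concrete, here is a minimal Python sketch of a reconciliation step: compare the current state with the desired one, work out a plan, and apply it. It does not reproduce how Ansible or Terraform work internally; the `plan` and `apply` functions and the resource names are assumptions made up for the illustration.

```python
from typing import Dict, List, Tuple

Resource = Dict[str, object]  # attributes of a single resource
State = Dict[str, Resource]   # resource name -> attributes


def plan(current: State, desired: State) -> List[Tuple[str, str]]:
    """Return the (action, resource name) steps that turn current into desired."""
    steps: List[Tuple[str, str]] = []
    for name in sorted(desired):
        if name not in current:
            steps.append(("create", name))
        elif current[name] != desired[name]:
            steps.append(("update", name))
    for name in sorted(current):
        if name not in desired:
            steps.append(("delete", name))
    return steps


def apply(current: State, desired: State) -> State:
    """Execute the plan on a copy of the current state and return the result."""
    new_state: State = dict(current)
    for action, name in plan(current, desired):
        if action == "delete":
            del new_state[name]
        else:  # "create" and "update" both end with the desired attributes
            new_state[name] = dict(desired[name])
    return new_state


if __name__ == "__main__":
    current = {"web": {"size": "small"}, "old-db": {"size": "large"}}
    desired = {"web": {"size": "medium"}, "cache": {"size": "small"}}
    print(plan(current, desired))
    reconciled = apply(current, desired)
    print(plan(reconciled, desired))  # nothing left to do
```

Running the plan a second time on the reconciled state returns no steps: when the current state already matches the desired one, nothing is changed.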

Immutable Infrastructure

Immutable infrastructure is an approach to managing services and software deployments wherein components are replaced rather than changed. They are effectively redeployed each time any change occurs.

Traditionally, an application or service update requires that a component is changed in production, while the complete service or application remains operational. Immutable infrastructure instead relies on instantiating "golden images", where components are assembled on computing resources to form the service or application.

Once the service or application is instantiated, its components are set - thus, the service or application is immutable, unable to change. When a change is made to one or more components of a service or application, a new golden image is assembled, tested, validated and made available for use. Then the old instance is discontinued, to free the computing resources within the environment for other tasks.
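The "replace rather than change" principle can be illustrated with a small Python sketch. It is a toy model under stated assumptions: the `Instance` class, the `roll_out` function and the image names are hypothetical, and a real rollout would of course involve building and testing the image first.

```python
from dataclasses import FrozenInstanceError, dataclass
from typing import List


@dataclass(frozen=True)  # frozen: attributes cannot be changed after creation
class Instance:
    name: str
    image: str  # identifier of the golden image the instance was built from


def roll_out(running: List[Instance], new_image: str) -> List[Instance]:
    """Build a fresh instance from new_image for each running one.

    The old instances are never modified: the caller simply discards them
    once the replacements are in service.
    """
    return [Instance(name=old.name, image=new_image) for old in running]


if __name__ == "__main__":
    fleet = [Instance("web-1", "app-v1"), Instance("web-2", "app-v1")]

    try:
        fleet[0].image = "app-v2"  # in-place change is rejected
    except FrozenInstanceError:
        print("instances cannot be patched in place")

    fleet = roll_out(fleet, "app-v2")  # the whole fleet is replaced instead
    print(fleet)
```

The frozen dataclass plays the role of the golden image here: once an instance exists, the only way to get a different one is to create it anew.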

You can find a very good description and reusable examples at these two websites:

Immutable Infrastructure with Ansible, Packer and Terraform on Azure - https://devopsand.beer/2022/03/26/immutable-Infrastructure-with-ansible-packer-and-terraform-on-azure/

Immutable Infrastructure Using Packer, Ansible, and Terraform - https://medium.com/paul-zhao-projects/immutable-infrastructure-using-packer-ansible-and-terraform-a275aa6e9ff7

Accessory tools for "scaffolding"

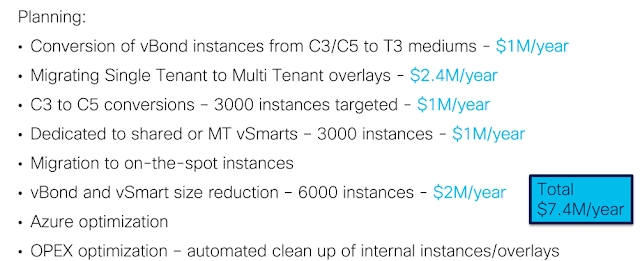

The automation you can build with these tools is amazing, and it saves you time and trouble (sometimes also money as a consequence). As a single individual, or as part of a small team, you are much more productive thanks to the reuse of scripts and blueprints, less troubleshooting and faster provisioning.

When the size of the operations team, or of the organization made of different teams, grows beyond a handful of people, some coordination issues start to become visible.

- If many people use the same scripts (playbooks, configuration plans, etc.), those resources need to be accessible in a central repository (generally a VCS) and you need to enforce RBAC (role-based access control) to protect them.

- Credentials to access the target systems cannot be stored within the code in the VCS, so you need to store them separately and pass them in as variables.

- If a change is pushed to the environment, people need to be notified (even more if a pipeline fails and someone has to fix it).

- Bespoke IaC pipelines can stretch across personal machines or shared VMs, creating a management nightmare.

- Terraform state files contain sensitive information, which requires special handling and access control.
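As an illustration of the point about credentials in the list above, this is a minimal Python sketch of a script that receives its secrets as environment variables instead of reading them from the code in the VCS. The variable names `TARGET_USERNAME` and `TARGET_PASSWORD`, and the two helper functions, are hypothetical and only serve the example.

```python
import os
from typing import Dict, Mapping, Optional

# Hypothetical variable names: use whatever your pipeline or secret store injects.
REQUIRED = ("TARGET_USERNAME", "TARGET_PASSWORD")


def load_credentials(env: Optional[Mapping[str, str]] = None) -> Dict[str, str]:
    """Read the credentials from the environment, failing early if any is missing."""
    source = os.environ if env is None else env
    missing = [key for key in REQUIRED if not source.get(key)]
    if missing:
        raise RuntimeError("missing credentials: " + ", ".join(missing))
    return {key: source[key] for key in REQUIRED}


def redact(credentials: Mapping[str, str]) -> Dict[str, str]:
    """Return a copy that is safe to print or log."""
    return {key: "***" for key in credentials}


if __name__ == "__main__":
    # An explicit mapping is passed here only to keep the demo self-contained;
    # in a real run you would call load_credentials() with no argument.
    demo_env = {"TARGET_USERNAME": "automation", "TARGET_PASSWORD": "not-a-real-secret"}
    credentials = load_credentials(demo_env)
    print(redact(credentials))
```

The code in the repository only knows the names of the variables; the values are supplied at run time by whoever, or whatever, launches the script.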

So you start defining processes to work in an orderly manner, and adopting accessory tools to store the secrets (one example is Vault, which stores credentials in a safe, centralized place). The governance work and the tools you start accumulating are called scaffolding, and they can rapidly become such a burden that it outweighs the advantages you got from adopting the Infrastructure as Code approach (this happens only at a large scale and if you don't have experienced staff).

A solution to this problem is offered by the enterprise versions of the tools (both Ansible and Terraform), which are also available as a SaaS option. The paid versions, which are also supported by the vendors, offer everything you need for collaboration in large teams and spare you the investment of building the whole operational framework yourself.

I'm not saying that you absolutely need those versions, but consider that the miracles an engineer can do with the free, single-binary, local setup of the automation tools are less likely to be seen at the scale of a larger team, when the IaC best practices are broadly adopted. There will be an inflection point where the benefits provided by the enterprise edition justify the cost of the solution.