Hybrid cloud is a must nowadays; I will not spend a word trying to convince you (you would not be reading this post if you did not believe it). This is the story of a real project.

The structure of the post is:

- Motivation

- Use Cases

- Time

- Software Stack

- Benefits of the architecture we implemented

- Lessons Learned (the most important part)

The motivations for hybrid cloud, and most of the work in my customers' projects, fall into the following areas:

- Cost control (there is a strong debate: some swear it is cheaper, while others have discovered hidden costs, e.g. network traffic in production, after building a business case only on the cost of VM provisioning).

- Governance model (IT must find a way to maintain control over resource usage, design patterns, compliance and security when application developers choose private cloud or public cloud).

- Mature technical solution: architecture and technology (there are many good products and system integrators in the market)

But, once you have made a decision, what will you run in the hybrid cloud?

Will your applications be spread across the boundary of your datacenter (one tier inside, other tiers outside)?

Or can we say that it is rather a multi-cloud deployment, where you have a number of resource pools that you can use as a target for deployments?

This project was made by a large corporation, to test how a hybrid cloud can be built and operated and to verify the impact on their current organization.

It is not a full production environment; it is a pilot project that demonstrated, on a small scale, how easily you can build a software-defined, fully automated data center that includes resource pools from both your local data center(s) and public cloud providers.

The solution is expected to be cost effective, of course, but the greater benefits come from business agility and consistent governance.

Use Cases:

The evaluation was focused on 3 main use cases, all requiring that end users order the deployment of a complex software stack from a service catalog: the target for the deployment can be either the private cloud or the public cloud, or a combination of the two. These are the areas where the implementation demonstrated the value of the multi-cloud solution:

- Business Intelligence (self-deployment of R Studio and additional tools)

- ETL (self-deployment of a common software for ETL that data scientists would use in autonomy)

- High Performance Computing (HPC) on OpenStack, with the integration of a DevOps pipeline.

Subject matter experts were provided from different lines of business in the company to support the implementation activities and evaluate the result.

The use cases represent some frequent activities that the company needs in its usual business, especially in R&D. Improving efficiency and quality in the associated processes will have an impact on the overall business outcome. The applications selected for the self-service catalog are those that are deployed frequently (every week) and whose installation takes time (some man-days, accounting for both infrastructure and software setup), delaying business objectives.

Time:

All the activities in the project were delivered on time (six weeks), including the setup of the hardware and software systems for the hybrid cloud, the implementation of the 3 main use cases and some additional use cases, the functional tests and the stress tests. This demonstrates that a proper selection of the technology and a good organization of the project allow for an immediate return.

Some challenges slowed down the implementation: setting up access to the lab for remote experts, constraints in the networking and security configuration of the lab, and some missing information about the process to install the applications (essential to build the model for the automation). See Lessons Learned.

Software Stack:

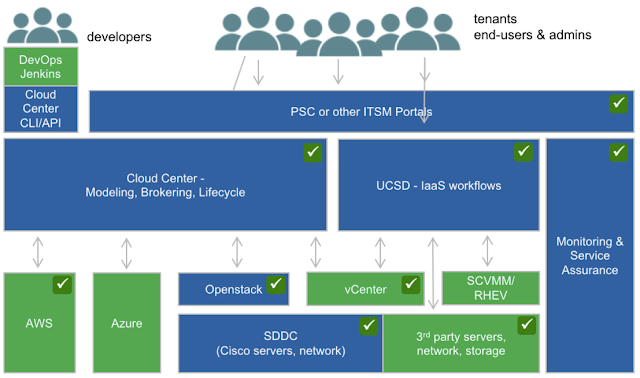

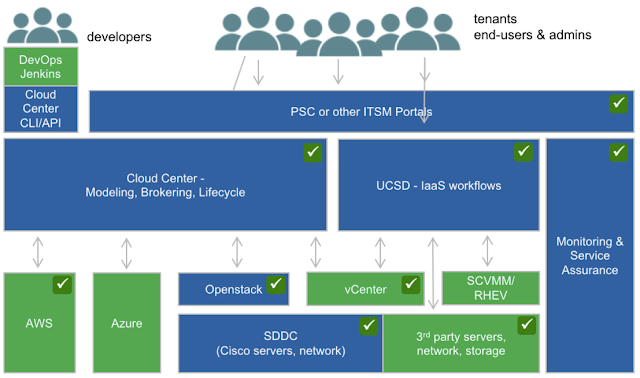

This is a complete end to end solution: its adoption will happen with a phased approach, starting from the components that grant an easy and immediate impact on the most critical business requirements and adopting some non-functional components later to complete the architecture. The extension from private cloud (based on any combination of VMware, other hypervisors and OpenStack) to a hybrid cloud (integrating AWS, Azure and more) was very quick (it is just a matter of configuration and definition of the governance model). Checkmarks in the picture show what we realized in the short timeframe of the project. The rest is part of a phased plan. The blue boxes show the components provided by Cisco.

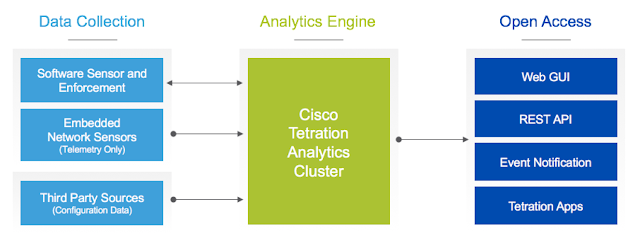

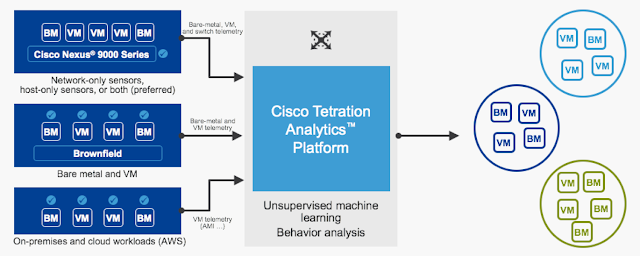

The fundamental component in this architecture is Cisco CloudCenter (CCC), which has two main roles:

- providing an orchestration solution that offers users the possibility to self-deploy complex software stacks from blueprints offered in a catalog,

- brokering cloud resources from both private and public clouds (in the project we integrated VMware, OpenStack and AWS, but more clouds are supported).

CloudCenter manages the lifecycle of software applications in the cloud (at a level of abstraction where the underlying physical infrastructure does not matter).

The OpenStack use cases for HPC are supported by a Cisco Validated Design named UCSO: it includes a reference architecture for running the Red Hat OSP8 distribution on a certified hardware platform made of Cisco UCS servers and Nexus 9000 switches. The setup process and the operations are defined by the official deployment guide, and Cisco's technical support assumes responsibility for the entire stack, including the Red Hat software.

The management of the entire DC infrastructure from a single orchestration platform was made possible by Cisco UCSD (UCS Director): a single dashboard and workflow engine to manage servers, network and storage, both physical and virtual. The status, the performance and the remaining capacity of all the systems were monitored with Cisco UCSPM (UCS Performance Manager).

Benefits of the architecture we implemented

The implementation of the multi-cloud solution demonstrated the major benefits that a hybrid cloud delivers.

It provides a consistent architecture based on software (and optionally hardware) components that integrate easily and satisfy all the business and technical requirements.

All components in the architecture are loosely coupled and their integration is based on standard protocols and documented open APIs. As a consequence, every component can be replaced by an alternative solution (from a different vendor, from open source, or from a custom build) with no fear of vendor lock-in.

The adoption of a hybrid cloud solution can happen gradually: start with a core implementation of the most critical components (e.g. CCC, ACI and UCSO), add more features as a second step (infrastructure automation and monitoring with UCSD and UCSPM), and finally add a unified service catalog and ITSM portal.

Lessons Learned:

- use cases

- network topology

- security and trust

- reusable work (repositories and services)

- engage SME and business owners

- document

- refine (iterations, devops)

Use Cases

The selection of the use cases is important. You need a quick return to demonstrate the value of the hybrid cloud: the adoption of the hybrid model should address immediate business needs that the end users can appreciate, rather than be driven just by an industry trend.

IT projects should not start because a new technology is very smart, but because the outcome makes the business easier and more productive.

Always engage your end users in the planning phase and avoid academic use cases that have limited appeal to the decision makers. In this project we were lucky because the steering committee had done the preparation well in advance.

Once the models for the automation were ready, we could test any combination of deployment targets for the application tiers: everything in the private cloud, everything in the public cloud, or the front end on one side and the back end on the other. The benchmarking capabilities of the product (CCC) allowed us to compare the price/performance ratio of the different options based on vSphere, OpenStack and AWS, specifically for each application and with tailored reporting.
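To make the idea of a price/performance comparison concrete, here is a minimal sketch in Python. It is not how CCC computes or reports its benchmarks: the `BenchmarkResult` shape, the function names and the choice of "cost per unit of work" as the ratio are all assumptions made for illustration, and the numbers are placeholders, not measurements from the project.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BenchmarkResult:
    """One benchmark run of an application on a target cloud (hypothetical shape)."""
    cloud: str           # e.g. "vSphere", "OpenStack", "AWS"
    hourly_cost: float   # cost of the whole deployment per hour, any currency
    throughput: float    # measured work per hour, e.g. jobs completed


def cost_per_unit(result: BenchmarkResult) -> float:
    """Price/performance ratio as cost per unit of work: lower is better."""
    if result.throughput <= 0:
        raise ValueError("throughput must be positive")
    return result.hourly_cost / result.throughput


def rank_by_price_performance(results):
    """Return the results sorted from best to worst price/performance."""
    return sorted(results, key=cost_per_unit)


if __name__ == "__main__":
    # Placeholder numbers for illustration only, NOT measurements from the project.
    runs = [
        BenchmarkResult("vSphere", hourly_cost=2.0, throughput=100.0),
        BenchmarkResult("OpenStack", hourly_cost=1.5, throughput=60.0),
        BenchmarkResult("AWS", hourly_cost=3.0, throughput=200.0),
    ]
    for r in rank_by_price_performance(runs):
        print(f"{r.cloud}: {cost_per_unit(r):.4f} per unit of work")
```

The point of ranking per application is that the cheapest target per hour is not necessarily the cheapest per unit of work done.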

Network Topology

A hybrid environment connects - by definition - areas that were designed separately (your datacenter and the public cloud). They have security policies and configurations that are not meant to work together, which makes the integration difficult. Before you start the setup, take enough time to collect all the requirements and to design the connectivity properly.

We had some issues with the network proxies and the firewalls because of the protocols and ports that we needed to open to integrate the Cloud Management Platform (running on premises) with the orchestration engine. One instance of the engine runs in each cloud region used in the project, to leverage the local API exposed by the cloud provider and to manage the lifecycle of the applications in that cloud.

Another important requirement is to have a single repository for all the artifacts, the blueprints and the installation packages for the applications: it should be reachable from all the target clouds that you plan to use, regardless of its location (it can be either in the private or in the public cloud, but all the servers you deploy will access it to stand up a new instance of the application).

The same applies to any public repository that is used in the setup of the applications (both commercial software and open source components, e.g. packages installed using yum).
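A quick way to catch proxy and firewall problems early is to run a small connectivity check from a test VM in each target cloud before you start modeling the applications. The sketch below uses only the Python standard library and tests plain TCP reachability; it does not validate HTTP proxies, TLS certificates or authentication. The function names are hypothetical, and the hosts and ports you pass in are yours to define.

```python
import socket


def is_reachable(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers DNS failures, refused connections and timeouts.
        return False


def check_endpoints(endpoints, timeout: float = 3.0):
    """Map each (host, port) pair to its reachability from this machine."""
    return {
        (host, port): is_reachable(host, port, timeout)
        for host, port in endpoints
    }
```

For example, calling `check_endpoints([("artifacts.example.internal", 443)])` from a VM in each cloud (the hostname is a placeholder) tells you whether the artifact repository is visible from there before a deployment fails halfway through.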

See also CCC Components Overview for more detail.

Security and Trust

It's important that a good level of trust is established between the architects building the hybrid cloud and the operations team, especially the security guys. Special rules and new policies need to be set up to allow the new platform to work: it's impossible to keep the same old governance model that addresses a single end user identity.

Sometimes I feel like I'm reliving the same conflict that I had with database administrators when I tried to configure JDBC connection pools in the first Java application servers in the 90's. The system should be trusted, and the delegation of decisions (authentication, authorization and audit) accepted.

Reusable work (repositories and services)

When you model a software application to automate its deployment, you should identify any building block that can potentially be reused in a different model. If you create a reusable (parametric) deliverable and save it individually in a common repository, next time you'll have the work ready to be reused.

This applies to architectural building blocks like database servers, web servers, load balancers, firewalls, distributed caches, etc.

If they have been created as separate services, instead of just being a part of a monolithic model, they will appear in your designer's palette every time you model a similar application and you can drag and drop them into the topology. We did that in the project and we saved a lot of time in the implementation.
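The principle is independent of the tool. As a rough illustration (this is not CloudCenter's model format; the `Service` class, the `REPOSITORY` contents and the parameter names are all invented for this sketch), a parametric building block is just a named set of defaults that each application overrides where needed:

```python
from dataclasses import dataclass, field


@dataclass
class Service:
    """A reusable building block with default parameters (hypothetical model)."""
    name: str
    defaults: dict = field(default_factory=dict)

    def instantiate(self, **overrides):
        """Return a concrete tier: defaults merged with per-application overrides."""
        unknown = set(overrides) - set(self.defaults)
        if unknown:
            raise KeyError(f"unknown parameters for {self.name}: {sorted(unknown)}")
        return {"service": self.name, "params": {**self.defaults, **overrides}}


# A shared repository of services, modeled once and reused by every blueprint.
# The entries and their parameters are illustrative assumptions.
REPOSITORY = {
    "database": Service("database", {"engine": "postgresql", "storage_gb": 50}),
    "web": Service("web", {"port": 80, "instances": 1}),
    "load_balancer": Service("load_balancer", {"algorithm": "round_robin"}),
}


def build_topology(app_name, tiers):
    """Compose an application model from (service_name, overrides) pairs."""
    return {
        "application": app_name,
        "tiers": [REPOSITORY[name].instantiate(**overrides) for name, overrides in tiers],
    }
```

A new three-tier application then becomes a short list of references, for example `build_topology("shop", [("load_balancer", {}), ("web", {"instances": 3}), ("database", {})])`, instead of a new monolithic model.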

Engage SME and business owners

It is important that subject matter experts (SME) collaborate on the definition of the blueprints and the building of the automation model. Even though documentation exists for the deployment of the application, you should work together.

The SME knows all the requirements, knows how to verify and troubleshoot, and has already encountered all the setup issues.

I've learned that the best way to document the setup process for an application, so that you can use it as a reference for the automation, is to ask the SME to install it in front of you in a clean environment where the application was never run, and record a video of the process. It's faster than writing documentation, more complete and reliable. We did that using the desktop sharing feature in Webex and we recorded the sessions.

Document

While you do the work, keep track of all the steps. Take (maybe informal) notes, but mostly take a lot of screenshots to document what you did. You can keep them on a wiki or in a shared folder; they will help a lot when you have time to create the formal documentation of the project. If you need to troubleshoot, possibly involving other people, this information will be invaluable.

Of course, versioning and taking snapshots of all deliverables also helps in case you need to go back for whatever reason.

Refine (iterations, devops)

Create the implementation for a minimum viable product (MVP) as soon as you can. Get the product (i.e. the entire self service catalog, or just the implementation of a single application blueprint) to early customers as soon as possible, to get their feedback before you go too deep in the implementation.

Applied to a hybrid cloud scenario, this will help to evaluate:

- quality of the service you are building, including documentation

- how much the users need it and use it in the real world

- performance of the distributed environment and any bottlenecks (network, computing, configuration)

- security implications

You will have plenty of time to make it perfect, through iterations that improve the implementation, collect feedback, and allow you to tune the design and the configuration. There is no need to work in a hurry and make mistakes while your users wait for the final "perfect" product without seeing any progress.