The value of automation in the DataCenter

Everyone is aware of the value of the automation.Many companies and individual engineers implemented various ways to save time, from shell scripts to complex programs and to fully automated IaaS solutions.

It helps reducing the so called "Shadow IT", a phenomenon that happens when developers can't get a fast enough response from the IT of the company and rush to the public cloud to get what they need. Doing that they complete and release their project soon, but sometimes troubles start with the production phase of the deployment (unexpected additional budget for the IT, new technologies that they are not ready to manage, etc.).

|

| shadow IT happens when corporate IT is not fast enough |

For sure, some departments are organized in silos (a team responsible for servers, one for storage, one for networking, one for virtual machines, of course one for security...) and the provisioning of even simple requests takes too long.

|

| process inefficiency due to silos and wait time |

Pressure on the infrastructure managers

So there is inefficiency in the company, that affects the business outcome of every project.Longer time to market for strategic initiatives, higher costs for infrastructure and people.

Finger pointing starts, to identify who is responsible for the bottleneck.

The efficiency of teams and individuals is questioned, and responsibility is cascaded through the organization from project managers to developers, to the server team, to the storage team and generally the network is at the end of the chain... so that they have no one else to blame.

Those on the top (they consider themselves on top of the value chain) believe - or try to demonstrate - that their work is slowed down by the inefficiency of the teams they depend on. They try to suggest solutions like: "you said that your infrastructure is programmable, now give me your API and I will create everything I need on demand".

Of course this approach could bring some value (not much, as we'll see in the rest of the post) but it mines the relevance of the specialists teams that are supposed to manage the infrastructure according to best practices, to apply architectural blueprints that have been optimized for the company's specific business, to know the technology in deeper detail.

So they can't accept to be bypassed by a bunch of developers that want to corrupt the system playing with precious assets with their dirty hands.

The definitive question is: who owns the automation?

Should it be left to people that know what they need (e.g. Developers)?

Should it be owned by people that know how technology works, and at the end of the day are responsible for the SLA including performances, security and reliability that could be affected by a configuration made by others (i.e. IT Administrators)?

In my opinion, and based on the experience shared with many customers, the second answer is the correct one.

By definition the developer is not an expert on security: if he can easily program a switch via its REST API to get a network segment, it’s not the same when traffic needs to be secured and inspected.

|

| The IT Admin patrolling the infrastructure |

Offering a self service catalog (or API)

A first, immediate solution could be the introduction of an easy automation tool like Cisco UCS Director, that manages almost every element in a multi vendor Data Center infrastructure: from servers to networks to storage to virtualization in a single dashboard. But what is more interesting is that every atomic action you do in the GUI is also reflected in a task in the automation library, that allows you to create custom workflows lining all the tasks for a process that you want to automate.A common example of automation workflow is the creation of a 4-hypervisors server farm.

A single workflow starts from the SAN storage creating a volume and 4 LUN, where the hypervisor will be installed to enable remote boot for the servers. Then a network is created (or the existing management network will be used) and 4 Service Profiles (the definition of a server in Cisco UCS) are created from a template, with individual ip address, mac address and wwn for each network interface. Then, zoning and masking are executed to map every new server to a specific LUN and the service profiles are associated to 4 available servers (either blades or rack mount servers). The hypervisors are installed using the PXE boot, writing the bytes in the remote storage, configured and customized, and finally added to a (new) cluster in the hypervisor manager (e.g. vCenter).

All this process takes less then one hour: you could launch it and go to lunch, when you're back you'll find the cluster up and running. Compare it to a manual provisioning of the same server farm, eventually performed by a number of different teams (see the picture above): it would take days, sometimes weeks.

Other use cases are simpler: maybe just creating a 3 tier application with VM and dedicated networks.

Once the automation workflow has been built and validated, it can be used by the IT admin or by the Operations everyday, to save time and ensure consistent outcome (no manual errors). But it can also be offered as a service to all the departments that depend on the IT for their projects.

You can build a service catalog with enterprise features: multitenancy, role based access control, reporting, chargeback, approvals, etc. But you can also offer (secured) access to the API to launch the workflow, offering a degree of autonomy to your consumers. Eventually, using a resource quota: you don’t want everyone to be able to create dozens of VMs every hour if the capacity of the system can't sustain it.

They will appreciate the efficiency improvement, for sure.

What's in it for me?

If you allow your internal clients to self serve, you will:

- get less requests for trivial tasks, that consume time and give no satisfaction (let them play with it),

- be the hero of the productivity increase (no requests pending in your queue)

- dedicate your time and skill to designing the architectural blueprint that will be offered as a service to your clients (so that everybody plays according to your rules)

- use policy based provisioning, so that you define the rules just once and map them to tenants and environments: every deployment will inherit them

- maintain control on resource consumption and system capacity, hence on costs and budget

- increase your relevance: they will come to you to discuss their needs, propose new services, collaborate in governance

Example: network provisioning

The discussion above is valid for the entire infrastructure in the Data Center.

Now I tell you the story of a customer that implemented it specifically for the networking.

They were influenced by the trend about SDN and initially they were caught in the marketing trap "SDN means software implemented networking, hence overlay". Then they realized the advantage provided by ACI and selected it as the SDN platform ("software defined networking", thanks to the software controller and the ACI policy model).

Developers and the Architecture department asked to access the API exposed to self provision what they needed for new projects, but this was seen as an invasion of the property (see the picture with the dirty hands).

It would have worked, but it implied a transfer of knowledge and delegation of responsibility on a critical asset. At the end of the day, if developers and software designers had knowledge in networking, specialists would not exist.

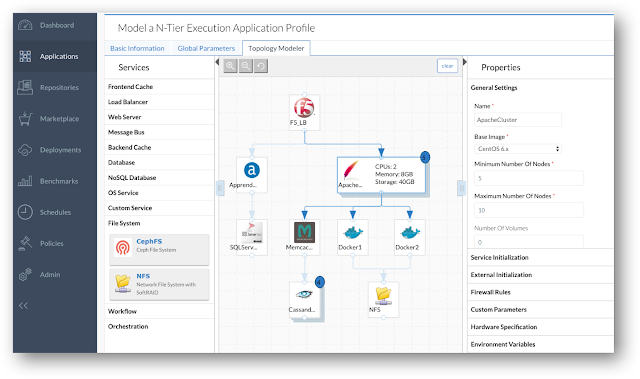

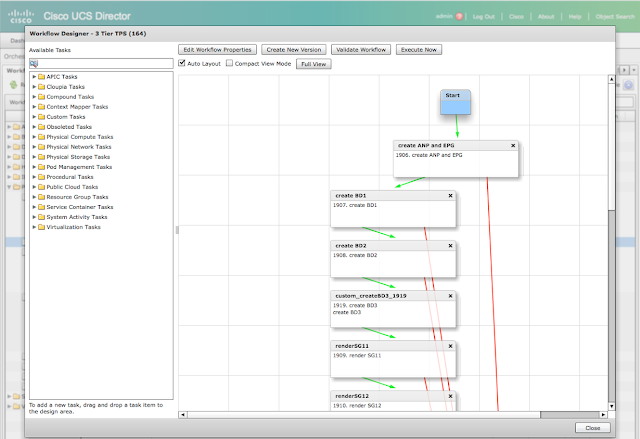

So the network admins built a number of workflows in UCS Director, using the hundreds of tasks offered by the automation library, to implement some use cases ranging from basic tasks (allow this VM to be reached from the DMZ) to more complex scenarios (create a new environment for a multi tier application including load balancer and firewall configuration, plus access from the monitoring tools, with a single request).

|

| Blueprint designed in collaboration with Security and Software Architects |

|

| Graphical Editor for the workflow |

These workflows are offered in a web portal (a service catalog is offered by UCSD out of the box) and through the REST API exposed by UCSD. Sample calls were provided to consumers as python clients, powershell clients and Postman collections, so that the higher level orchestration tool maintained by the Architecture dept was able to invoke the workflows immediately, inserting them in the business process automation that was already in place.

|

| Example of python client running a UCSD workflow |

All the executions of the workflows - launched through the self service catalog or through the REST API - are tracked in the system and the administrator can inspect the requests and their outcome:

|

| The Service Requests are audited and can be inspected and rolled back |

|

| The Admin has full control (see the tabs in the window) |

References

Cisco UCS DirectorCisco ACI

ACI for Simple Minds

ACI for (Smarter) Simple Minds

Invoking UCS Director Workflows via the Northbound API