Here I elaborate on the advantages that Cisco ACI (and some related open source projects) provide when you work with containers.

Policies and Containers

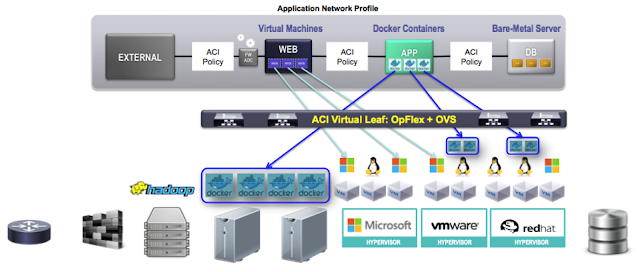

Cisco ACI offers a common policy model for managing IT operations. It is platform-agnostic: bare metal, virtual machines, and containers are treated the same, with a unified policy language and one clear security model, regardless of how an application is deployed.

ACI models how components of an application interact, including their requirements for connectivity, security, quality of service (e.g. reserved bandwidth for a specific service), and network services. ACI offers a clear path to migrate existing workloads towards container-based environments without any changes to the network policy, thanks to two main technologies:

- ACI Policy Model and OpFlex

- Open vSwitch (OVS)

OpFlex is a distributed policy protocol that allows application-centric policies to be enforced within a virtual switch such as OVS.

Each container can attach to an OVS bridge just as a virtual machine would, and the OpFlex agent helps ensure that the appropriate policy is established within OVS, because it communicates bidirectionally with the controller.
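The division of labor described above (the controller holds the policy, the local agent reports endpoints and renders the policy into the virtual switch when a container attaches) can be sketched as a toy model in Python. Everything here is an assumption for illustration: the `Controller` and `Agent` classes, the policy dictionary layout, and the rule tuples are hypothetical and do not reflect the OpFlex wire protocol or real OVS flow syntax.

```python
from dataclasses import dataclass, field


@dataclass
class Controller:
    """Hypothetical stand-in for the policy controller: it stores intent, not flows."""
    # EPG name -> list of (peer EPG, protocol, port) the EPG is allowed to reach
    policies: dict[str, list[tuple[str, str, int]]]
    # Endpoint -> EPG, as reported back by the agents (the upstream direction)
    endpoints: dict[str, str] = field(default_factory=dict)

    def register(self, endpoint: str, epg: str) -> None:
        self.endpoints[endpoint] = epg

    def resolve(self, epg: str) -> list[tuple[str, str, int]]:
        # Downstream direction: the agent asks only for the policy it needs
        return self.policies.get(epg, [])


@dataclass
class Agent:
    """Hypothetical per-host agent that renders policy into local switch rules."""
    controller: Controller
    # Simulated virtual-switch table of (endpoint, peer EPG, protocol, port) allow entries
    switch_rules: set[tuple[str, str, str, int]] = field(default_factory=set)

    def endpoint_attached(self, endpoint: str, epg: str) -> None:
        # A container plugged into the bridge: report it, then pull and render its policy
        self.controller.register(endpoint, epg)
        for peer, proto, port in self.controller.resolve(epg):
            self.switch_rules.add((endpoint, peer, proto, port))

    def allows(self, endpoint: str, peer: str, proto: str, port: int) -> bool:
        # Anything without a matching entry is denied in this model
        return (endpoint, peer, proto, port) in self.switch_rules


controller = Controller(policies={"web": [("db", "tcp", 5432)]})
agent = Agent(controller)
agent.endpoint_attached("container-1", "web")
print(agent.allows("container-1", "db", "tcp", 5432))  # True
print(agent.allows("container-1", "db", "tcp", 22))    # False
```

The point of the declarative split is that the controller never computes per-host flows: each agent renders only the policy for the endpoints it actually hosts.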

The result of this integration is the ability to build and manage a complete infrastructure that spans across physical, virtual, and container-based environments.

Cisco plans to release support for ACI with OpFlex, OVS, and containers before the end of 2015.

Value added by Cisco ACI to containers

I will explain how ACI supports the two main networking types in Docker: veth and macvlan. Both can be used today, because this support does not depend solely on OpFlex.

Container networking option 1 - veth

veth is the networking mode in which each container is connected, through a virtual Ethernet pair, to a virtual bridge: either a Linux bridge (docker0, the default option with Docker) or OVS (Open vSwitch, usually adopted with KVM and OpenStack). As a premise, remember that ACI manages connectivity and policies for bare-metal servers, VMs on any hypervisor, and network services (LB, FW, etc.) consistently and easily.

On top of that, you can add containers running on bare-metal Linux servers or inside virtual machines (different use cases favor one option or the other, but from an ACI standpoint they are the same).

That means applications (and the policies enabling them) can span any platform (servers, VMs, and containers) at the same time. Every service or component that makes up the application can be deployed on the platform best suited to it in terms of scalability, reliability, and management.

Extending the ACI fabric into virtual networks (through the OpFlex-enabled OVS switch) makes it possible to apply policies to any virtual endpoint that uses virtual Ethernet, such as Docker containers configured in veth mode.

Advantages of ACI with Docker veth

With this architecture we get two main results:

- Consistency of connectivity and service policies across physical, virtual, and container (LXC and Docker) workloads;

- Abstraction of the end-to-end network policy, providing location independence together with Docker portability (via shared repositories).

Container networking option 2 - macvlan

MACVLAN does not put a network bridge between the container's Ethernet interface and the physical network. You can think of MACVLAN as a hypothetical cable: one end is the container's interface, bound to the host's eth0, and the other end is the interface on the physical switch / ACI leaf.

What ties the two ends together is the VLAN (access or trunked).

In short, when you specify a VLAN for MACVLAN, you tell a container bound to eth0 on the Linux host to use VLAN XX (configured as access or trunk).

Connectivity is established when the other end of the cable matches: the switch port must carry the same VLAN XX (as access or trunk).
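On the Docker host, the VLAN binding described above is typically expressed by creating a macvlan network whose parent is an 802.1Q sub-interface of eth0 (for example eth0.10 for VLAN 10). The Python helper below only builds the command line. It assumes a Docker release with the built-in macvlan driver (newer than the Docker versions available when this post was written), and the subnet, gateway, and network name are placeholders.

```python
import ipaddress
import shlex


def macvlan_network_cmd(vlan_id: int, subnet: str, gateway: str,
                        parent_if: str = "eth0") -> list[str]:
    """Build a `docker network create` command for a VLAN-tagged macvlan network."""
    if not 1 <= vlan_id <= 4094:
        raise ValueError("802.1Q VLAN IDs range from 1 to 4094")
    net = ipaddress.ip_network(subnet)
    if ipaddress.ip_address(gateway) not in net:
        raise ValueError("gateway must be inside the subnet")
    return [
        "docker", "network", "create",
        "-d", "macvlan",
        f"--subnet={net}",
        f"--gateway={gateway}",
        # A parent name with a dot (eth0.10) tells Docker to use, or create,
        # the tagged sub-interface for that VLAN on top of eth0
        "-o", f"parent={parent_if}.{vlan_id}",
        f"macvlan{vlan_id}",  # placeholder network name
    ]


cmd = macvlan_network_cmd(10, "192.168.10.0/24", "192.168.10.1")
print(shlex.join(cmd))
```

A container started with `docker run --network macvlan10 ...` then sends frames tagged with VLAN 10, so the ACI leaf port facing the host must carry VLAN 10 as a trunk for the two ends of the "cable" to match.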

At this point you can map VLANs to EPGs (Endpoint Groups) in ACI, building policies that group containers as endpoints needing the same treatment, i.e. applying contracts to groups of containers.
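The grouping logic can be sketched as a toy model: the leaf classifies each frame into an EPG by its VLAN, and traffic between two different EPGs is permitted only if a contract allows it. The VLAN numbers, EPG names, and the simplified `Contract` structure are hypothetical; real ACI contracts are built from subjects and filters configured on the controller, and this is not its API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Contract:
    name: str
    consumer: str                          # EPG that initiates the traffic
    provider: str                          # EPG that offers the service
    filters: frozenset[tuple[str, int]]    # allowed (protocol, destination port) pairs


# Hypothetical leaf configuration: VLAN seen on the host-facing port -> EPG
VLAN_TO_EPG = {10: "web-containers", 20: "db-containers"}

CONTRACTS = [
    Contract("web-to-db", consumer="web-containers", provider="db-containers",
             filters=frozenset({("tcp", 5432)})),
]


def classify(vlan: int) -> Optional[str]:
    """Place a frame into an EPG based on its VLAN tag."""
    return VLAN_TO_EPG.get(vlan)


def is_allowed(src_vlan: int, dst_vlan: int, proto: str, port: int) -> bool:
    src, dst = classify(src_vlan), classify(dst_vlan)
    if src is None or dst is None:
        return False  # unclassified traffic is dropped in this model
    if src == dst:
        return True   # endpoints in the same EPG communicate freely by default
    # Only the consumer-to-provider direction is modelled; return traffic is ignored
    return any(
        c.consumer == src and c.provider == dst and (proto, port) in c.filters
        for c in CONTRACTS
    )


print(is_allowed(10, 20, "tcp", 5432))  # True: covered by the web-to-db contract
print(is_allowed(10, 20, "tcp", 22))    # False: port not in the contract's filters
print(is_allowed(20, 10, "tcp", 80))    # False: no contract in that direction
```

Note that a new container needs no policy change at all: placing it on VLAN 10 is enough for it to inherit the web-containers treatment.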

Advantages of ACI with Docker macvlan

This configuration provides two advantages (the first one is shared with veth):

- Extended portability for Docker container-based applications, because ACI policies are independent of the server's location.

- A network throughput increase of 5% to 15% (your mileage may vary; further tuning and testing will provide more detail), because no virtual switch consumes CPU cycles on the host.

Intent-based approach

A new intent-based approach is making its way into networking. An intent-based interface enables a controller to manage and direct network services and network resources based on a description of the “Intent” for network behavior. Intents are described to the controller through generalized, abstracted policy semantics instead of OpenFlow-like flow rules. The intent-based interface offers a descriptive way to get what is desired from the infrastructure, unlike current SDN interfaces, which describe how to provide the different services. This interface will accommodate orchestration services and network- and business-oriented SDN applications, including OpenStack Neutron, Service Function Chaining, and Group Based Policy.

Docker plugins for networks and volumes

Cisco is working on an open source project that aims to enable intent-based configuration for both networking and volumes. It will exploit the full potential of ACI in defining system behavior via policies, but will also work with non-ACI solutions. Contiv netplugin is a generic network plugin for Docker, designed to handle networking use cases in clustered multi-host systems.

It's still a work in progress and details can't be shared at this time but... stay tuned to see how Cisco is also leading in the open source world.
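To make the contrast between intent and flow rules concrete, here is a toy translation in Python: the operator states one intent ("web may reach db on TCP 5432"), and a hypothetical compiler expands it into per-endpoint match/action rules of the kind an OpenFlow-style interface would require. The `Intent` and `FlowRule` types, the group inventory, and the IP addresses are assumptions for illustration; they are not the data model of Contiv or of any project mentioned above.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Intent:
    """What the operator wants, with no reference to addresses or switches."""
    src_group: str
    dst_group: str
    protocol: str
    port: int


@dataclass(frozen=True)
class FlowRule:
    """How a flow-based interface would have to express it, endpoint by endpoint."""
    src_ip: str
    dst_ip: str
    ip_proto: int
    dst_port: int
    action: str


IP_PROTO = {"tcp": 6, "udp": 17}

# Hypothetical inventory: which endpoint IPs currently belong to each group
GROUPS = {
    "web": ["10.0.1.11", "10.0.1.12"],
    "db": ["10.0.2.21"],
}


def compile_intent(intent: Intent, groups: dict[str, list[str]]) -> list[FlowRule]:
    """Expand one declarative intent into explicit per-endpoint allow rules.

    Traffic that matches no rule is assumed to be dropped (default deny).
    """
    return [
        FlowRule(src, dst, IP_PROTO[intent.protocol], intent.port, "allow")
        for src, dst in product(groups[intent.src_group], groups[intent.dst_group])
    ]


web_to_db = Intent("web", "db", "tcp", 5432)
rules = compile_intent(web_to_db, GROUPS)
print(len(rules))  # 2
GROUPS["web"].append("10.0.1.13")  # a new web container comes up
print(len(compile_intent(web_to_db, GROUPS)))  # 3: same intent, more rules
```

The intent stays constant while the rendered rules churn with every container that starts or stops, which is why describing the "what" scales better than hand-maintaining the "how".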

Mantl: a Stack to manage Microservices

For your information, another project Cisco is delivering targets the lifecycle and orchestration of microservices. Mantl was developed in house as a framework to manage the cloud services offered by Cisco. Anyone can use it for free under the Apache License.

You can download Mantl and read its documentation on GitHub.

Mantl allows teams to run their services on any cloud provider, including bare metal, OpenStack, AWS, Vagrant, and GCE. Mantl uses industry-standard tools from the DevOps community, including Marathon, Mesos, Kubernetes, Docker, Vault, and more.

Each layer of Mantl's stack contributes to a unified, cohesive pipeline for support tasks such as managing Mesos or Kubernetes clusters during a peak workload or starting new VMs with Terraform. Whether you are scaling up by adding new VMs before a launch or deploying multiple nodes on a cluster, Mantl lets you work with every piece of your DevOps stack from a central location, without backtracking to debug or recompile code to make sure the microservices you need will work when you need them.

When working in a container-based DevOps environment, having the right microservices can make the difference between success and failure on a daily basis. Through the Marathon UI, you can stop and restart clusters, kill sick nodes, and manage scaling during peak hours. With Mantl, adding more VMs for QA testing or live use is simple with Terraform, without having to piece together code to make sure all the components work together without errors. Resolving microservice conflicts can severely impact productivity; Mantl cuts down the time spent on such conflicts, so DevOps teams can spend more time working on the application.
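The scaling that the Marathon UI offers is also exposed through Marathon's REST API: an application is described in JSON and posted to `/v2/apps`, then scaled by updating its `instances` count. The sketch below only builds the requests with Python's standard library and does not send them. The Marathon URL, the app id, and the Docker image are placeholders, and the JSON carries only a minimal subset of Marathon's app definition fields.

```python
import json
import urllib.request

MARATHON = "http://marathon.example.com:8080"  # placeholder endpoint


def create_app_request(app_id: str, image: str, instances: int) -> urllib.request.Request:
    """POST /v2/apps with a minimal Docker-based app definition."""
    app = {
        "id": app_id,
        "instances": instances,
        "cpus": 0.25,
        "mem": 128,
        "container": {"type": "DOCKER", "docker": {"image": image}},
    }
    return urllib.request.Request(
        f"{MARATHON}/v2/apps",
        data=json.dumps(app).encode(),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def scale_request(app_id: str, instances: int) -> urllib.request.Request:
    """PUT /v2/apps/<id> with a new instance count, e.g. ahead of a traffic peak."""
    return urllib.request.Request(
        f"{MARATHON}/v2/apps/{app_id.lstrip('/')}",
        data=json.dumps({"instances": instances}).encode(),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )


req = scale_request("/web", 10)
print(req.get_method(), req.full_url)
# To actually send it: urllib.request.urlopen(req) against a reachable Marathon instance
```

Keeping request construction separate from sending makes the payloads easy to inspect or test before pointing them at a live cluster.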

Key takeaways

ACI offers a seamless policy framework for application connectivity across VMs, physical hosts, and containers. ACI integrates Docker without requiring gateways (which would otherwise be needed if you build the overlay from within the host), so virtual and physical can be merged in the deployment of a single application.

Intent-based configuration makes networking easier. Plugins that enable intent-based configuration for Docker and integration with SDN solutions are coming fast.

Microservices are a key component of cloud-native applications. Their lifecycle can be complicated, but tools are emerging to orchestrate it end to end. Cisco Mantl is a complete solution for this need and is available for free on GitHub.

References

Much of the information has been taken from the following sources. You can refer to them for a deeper investigation of the subject:

https://docs.docker.com/userguide/

https://docs.docker.com/articles/security/

https://docs.docker.com/articles/networking/

http://www.dedoimedo.com/computers/docker-networking.html

https://mesosphere.github.io/presentations/mug-ericsson-2014/

Exploring Opportunities: Containers and OpenStack

ACI for Simple Minds

http://www.networkworld.com/article/2981630/data-center/containers-key-as-cisco-looks-to-open-data-center-os.html

http://blogs.cisco.com/datacenter/docker-and-the-rise-of-microservices

ACI and Containers white paper

Cisco and Red Hat white paper

Opendaylight and intent

Intent As The Common Interface to Network Resources

Mantl Introduces Microservices as a Stack

Project mantl
